Metrics

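`cortex get`

| env | api | status | up-to-date | requested | last update | avg request | 2XX |
| --- | --- | --- | --- | --- | --- | --- | --- |
| aws | iris-classifier | live | 1 | 1 | 17m | 24ms | 1223 |
| aws | text-generator | live | 1 | 1 | 8m | 180ms | 433 |
| aws | image-classifier-resnet50 | live | 2 | 2 | 1h | 32ms | 1121126 |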
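The per-API figures reported by `cortex get` can also be read programmatically. The following is a minimal sketch, not part of Cortex itself. It assumes the raw CLI output is whitespace-separated, starts with a single header line, and uses exactly the eight columns shown here, with `last update` and `avg request` as the only two-word headers. The helper name `parse_cortex_get` is hypothetical.

```python
# Hypothetical helper: not part of the Cortex CLI or any Cortex client library.
# Column names are copied from the `cortex get` header shown in this page.
COLUMNS = [
    "env", "api", "status", "up-to-date",
    "requested", "last update", "avg request", "2XX",
]


def parse_cortex_get(text):
    """Turn whitespace-separated `cortex get` rows into a list of dicts.

    Assumes the first non-blank line is the header and that every data
    row has exactly one token per column.
    """
    lines = [ln for ln in text.splitlines() if ln.strip()]
    rows = []
    for line in lines[1:]:  # skip the header line
        fields = line.split()
        if len(fields) != len(COLUMNS):
            continue  # ignore rows that do not match the assumed layout
        rows.append(dict(zip(COLUMNS, fields)))
    return rows


# Sample text taken from the output shown on this page.
sample = """\
env api status up-to-date requested last update avg request 2XX
aws iris-classifier live 1 1 17m 24ms 1223
aws text-generator live 1 1 8m 180ms 433
aws image-classifier-resnet50 live 2 2 1h 32ms 1121126
"""

for row in parse_cortex_get(sample):
    print(row["api"], row["avg request"], row["2XX"])
```

All values are kept as strings. Converting `24ms` or `1h` into numbers is left out because the exact duration formats the CLI can emit are not specified here.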

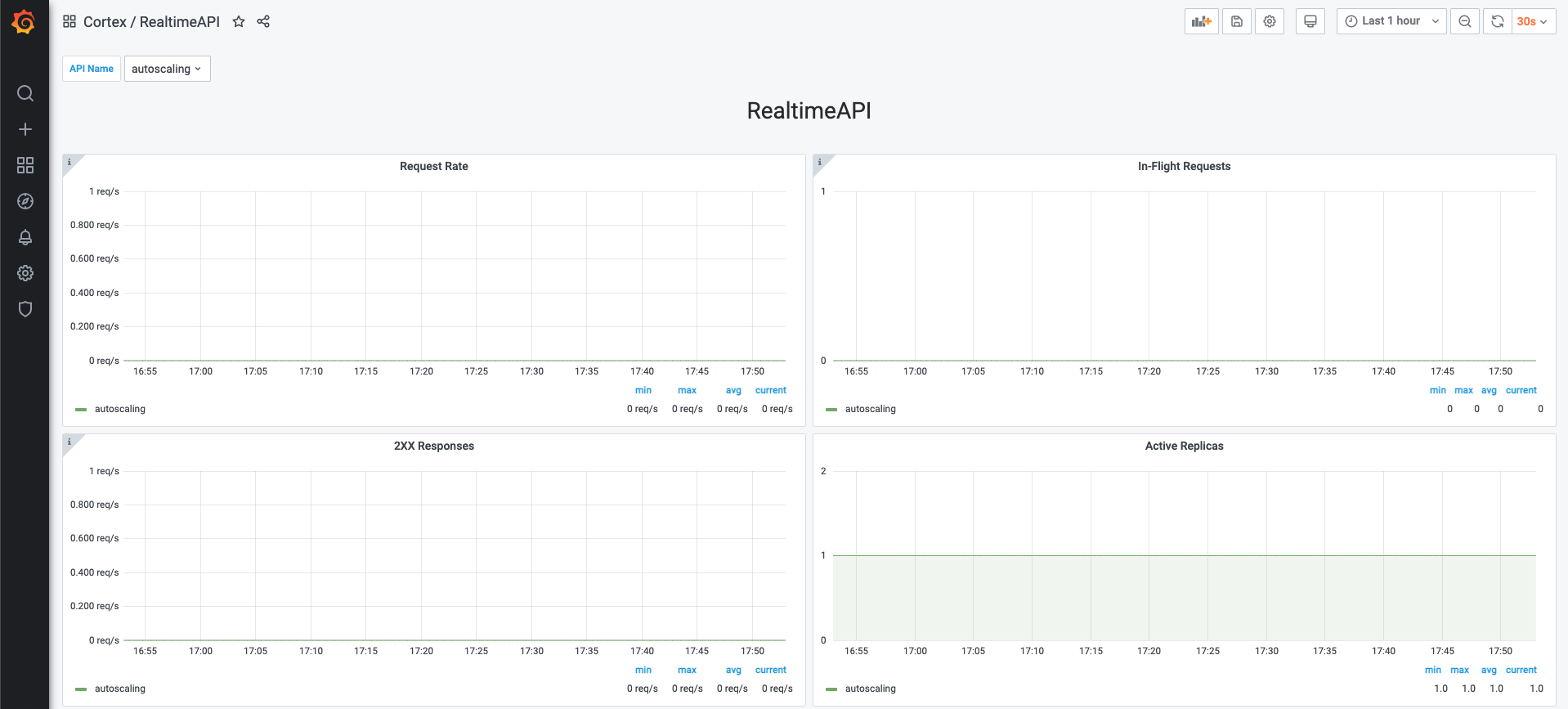

Metrics in the dashboard

Custom user metrics
